Overview

This project is a collaboration with the GeronTechnology Lab, directed by Dr. Mamoun T. Mardini in the Department of Health Outcomes and Biomedical Informatics, and is supported by the UF Informatics Institute (UFII) COVID-19 Response SEED Program. I’m pleased to share that our paper “Detecting Face Touching with Dynamic Time Warping on Smartwatches: A Preliminary Study” was accepted to ACM ICMI 2021 as a Late-Breaking Result. I’m grateful for the opportunity to present our work virtually (Figure 1) at the conference. Here is my pre-recorded talk at ICMI 2021.

Figure 1: The virtual booth at ICMI 2021.

Motivation and Project Goal

Respiratory diseases such as COVID-19 can be transmitted when people touch their faces. One of the official recommendations for protecting ourselves from such viruses is to avoid touching our eyes, nose, or mouth with unwashed hands. The goal of this project is therefore to explore an approach that helps users avoid touching their faces in daily life by alerting them through a smartwatch application every time a face-touching behavior occurs.

Method

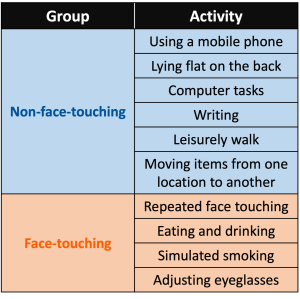

We selected 10 everyday activities (Table 1), including several that should be easy to distinguish from face touching and several that should be more challenging. We recruited 10 participants and asked each of them to perform every activity repeatedly for 3 minutes at their own pace while wearing a Samsung smartwatch.

Table 1: Everyday physical activities performed by participants.

Using the collected accelerometer data, we applied dynamic time warping (DTW) and machine learning (ML) methods (Logistic Regression, SVM, Decision Tree, and Random Forest) to distinguish between the two groups of activities (i.e., face-touching and non-face-touching). The INIT Lab focused on DTW, a method well suited to small datasets, while the GeronTechnology Lab focused on the ML methods. Interested readers can find the details of our collaborators’ analysis in the paper published in the Sensors journal.
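For readers unfamiliar with DTW, here is a minimal sketch of how a DTW distance between two accelerometer recordings could be computed and used for template matching. It is an illustration only, not the implementation from the paper: the nearest-neighbor rule, the Euclidean per-sample cost, and the names `dtw_distance` and `classify_nearest_template` are all assumptions made for this example.

```python
import math

def dtw_distance(a, b):
    """DTW distance between two sequences of (x, y, z) accelerometer samples.

    Assumption: the per-sample cost is the Euclidean distance between samples.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of the three allowed alignment steps.
            cost[i][j] = d + min(cost[i - 1][j],      # a advances, b repeats
                                 cost[i][j - 1],      # b advances, a repeats
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]

def classify_nearest_template(sample, templates):
    """Return the label of the template closest to `sample` under DTW.

    `templates` is a list of (label, sequence) pairs (hypothetical structure).
    """
    return min(templates, key=lambda t: dtw_distance(sample, t[1]))[0]
```

Because DTW aligns sequences non-linearly in time, the same gesture performed slowly or quickly can still match a stored template, which is one reason the method can work with only a few labeled examples per activity.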

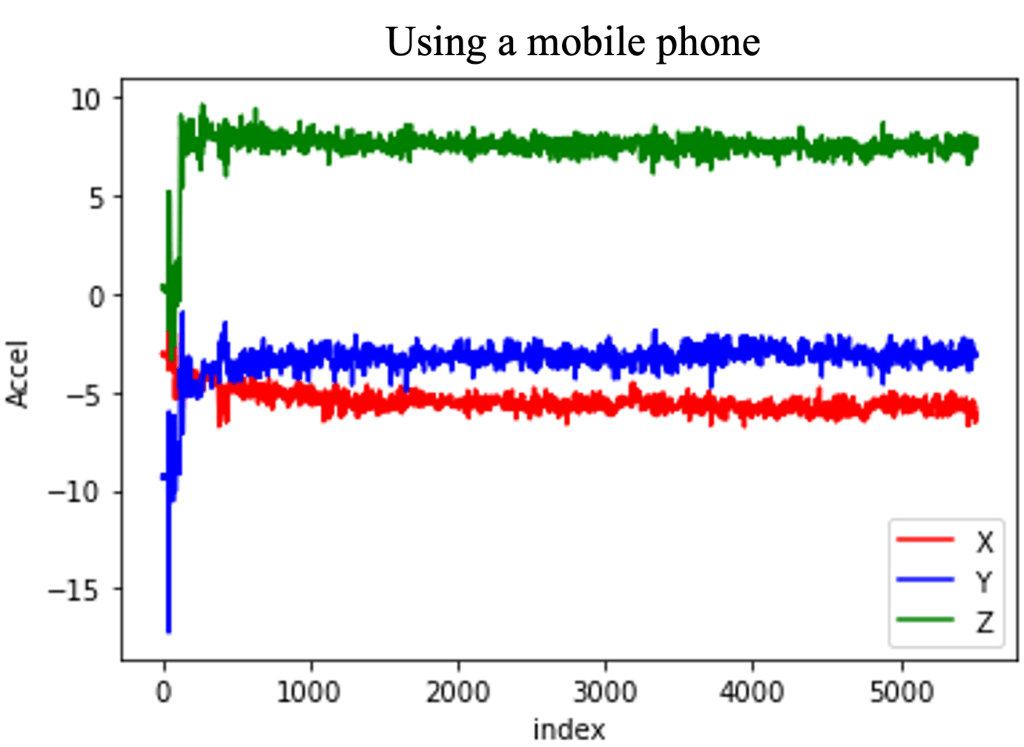

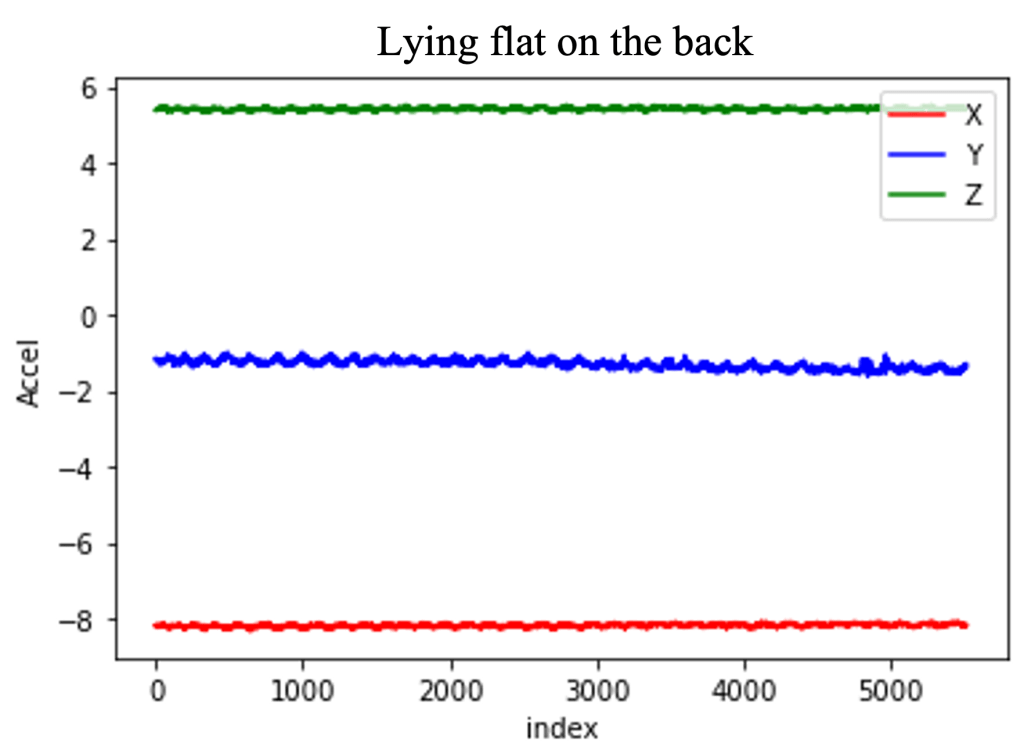

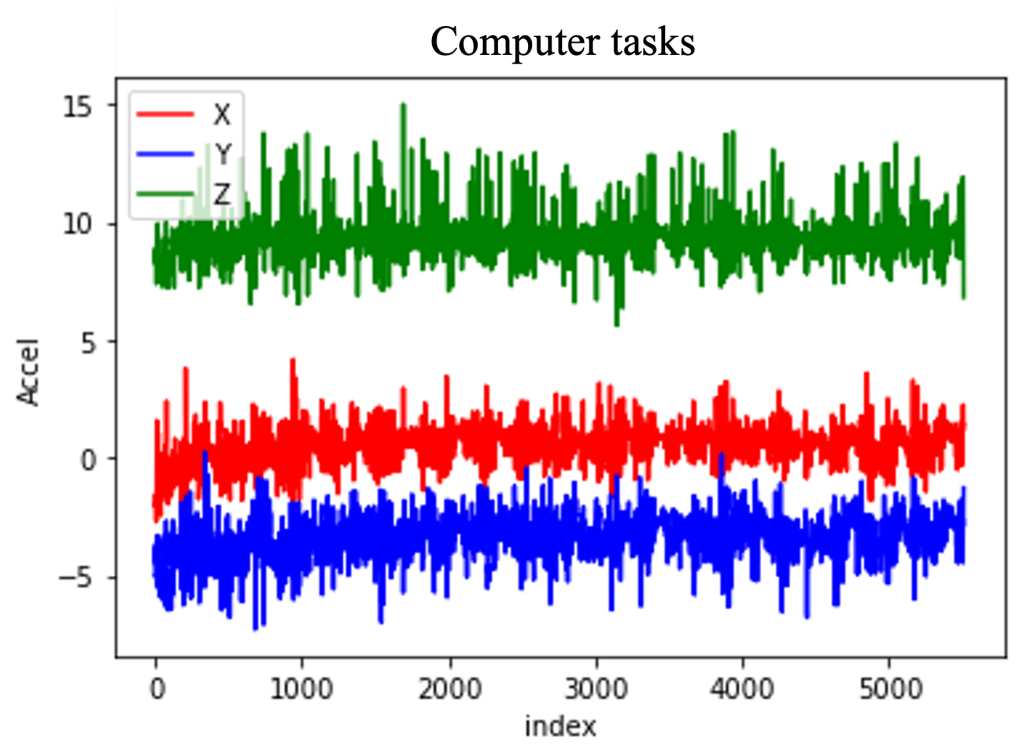

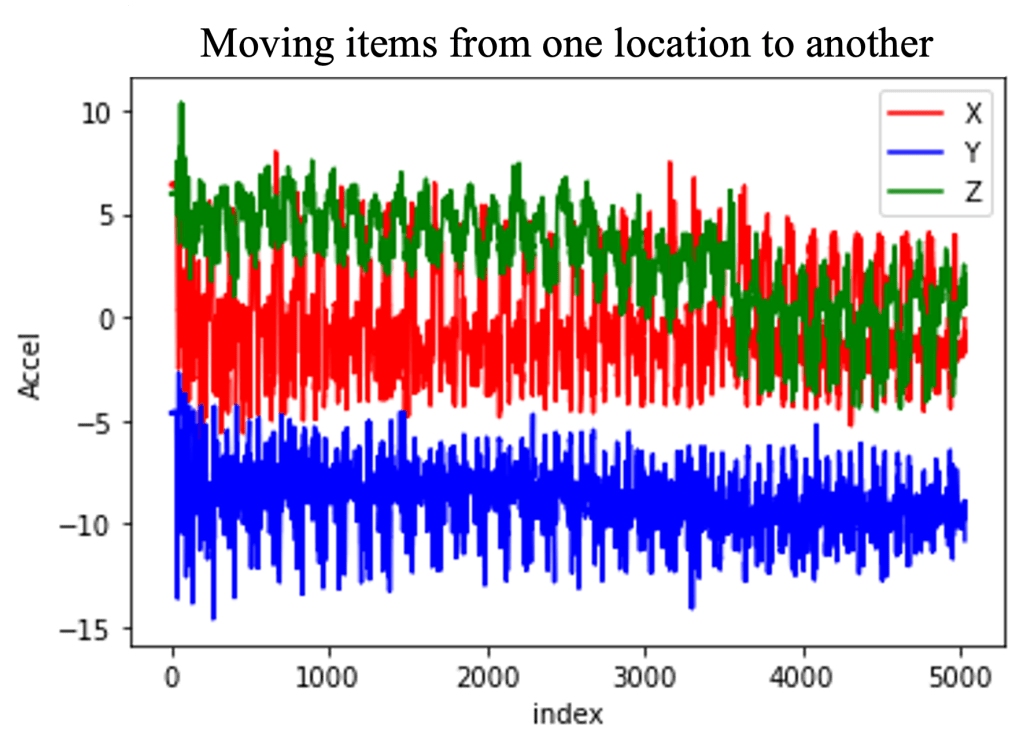

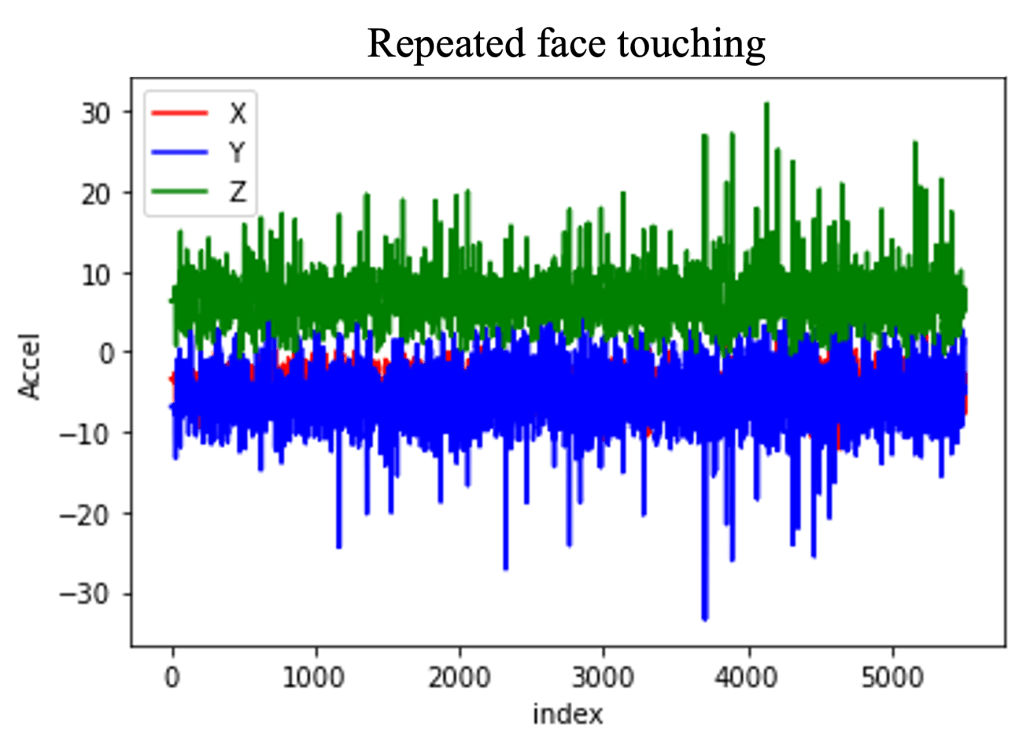

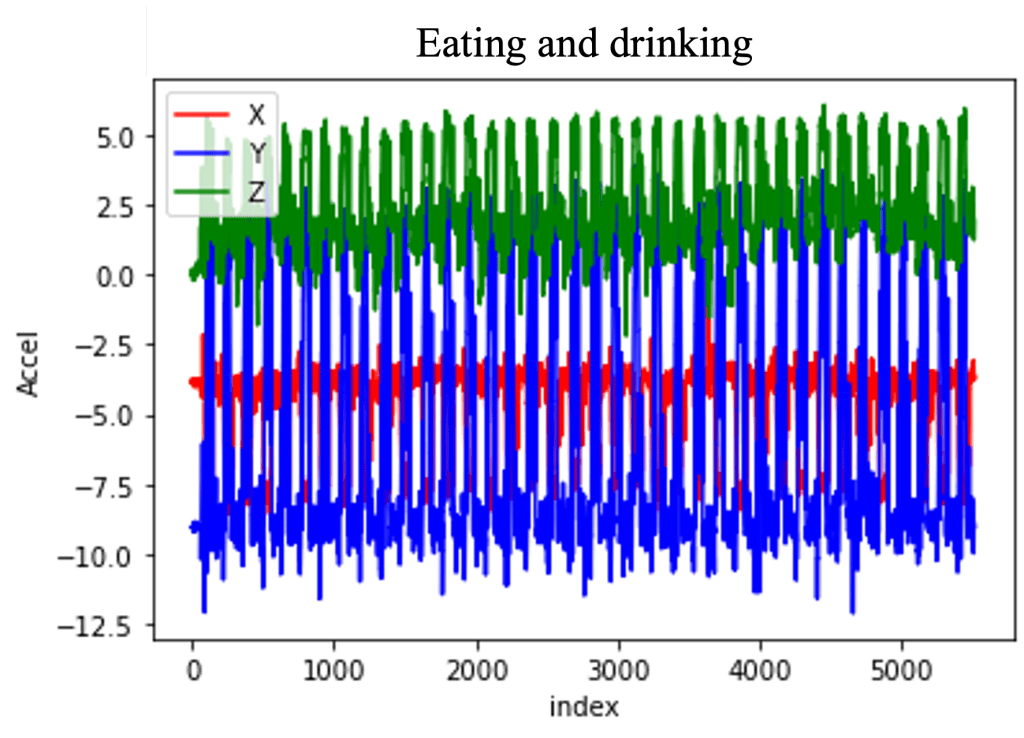

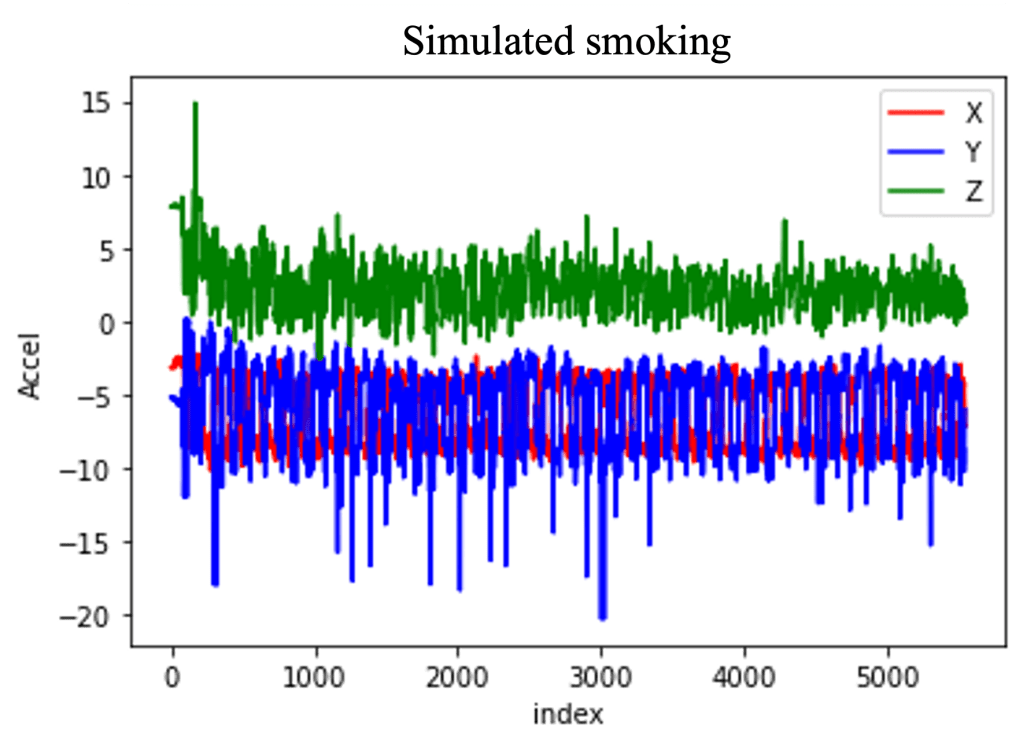

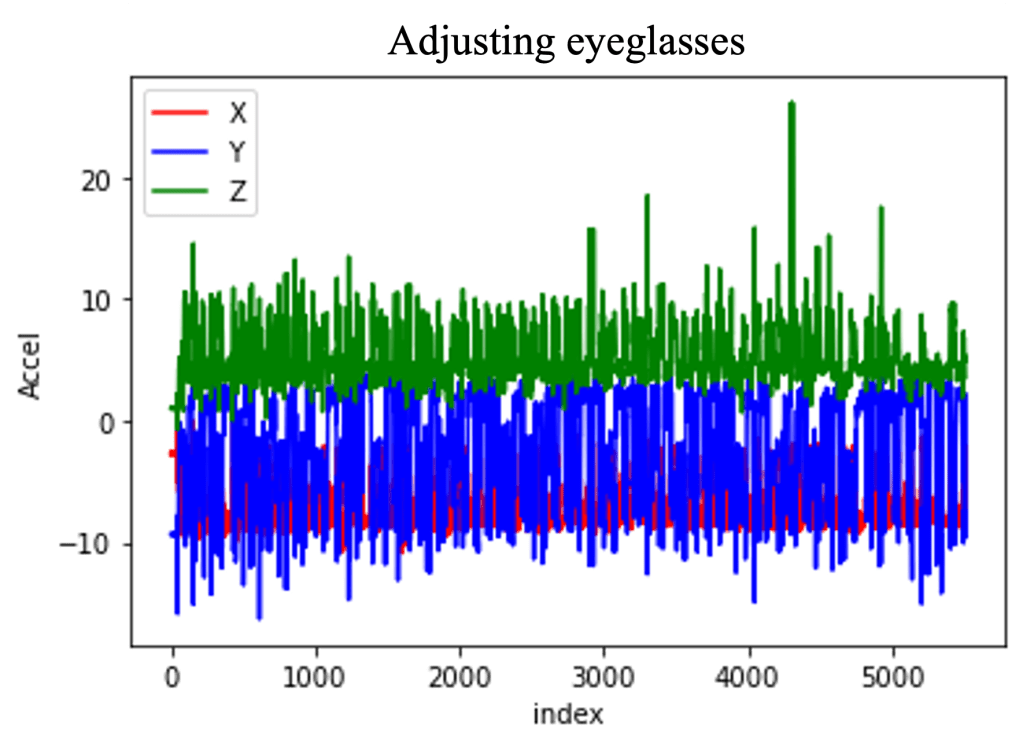

Data Visualization

Face-Touching Activities

Non-Face-Touching Activities

Analysis

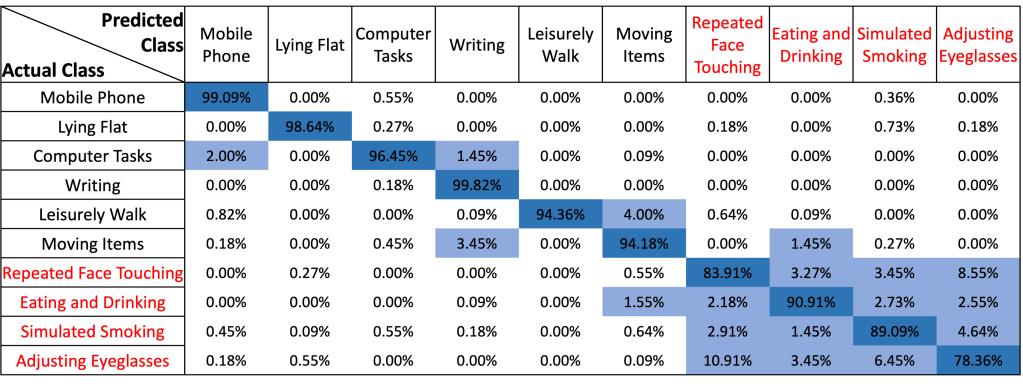

We tested the DTW-based classifier in two scenarios: a user-dependent scenario, in which the training and test sets are drawn from the same user (i.e., best-case accuracy), and a user-independent scenario, in which a set of users is used for training and an additional user is used for testing.

Our results showed that recognizing each activity individually (i.e., multiclass classification) is challenging, particularly across users: accuracy was 92.48% in the user-dependent scenario and 55.1% in the user-independent scenario. However, our DTW-based classifier can distinguish between face-touching and non-face-touching activities in general with satisfactory accuracy: 99.07% in the user-dependent scenario and 85.13% in the user-independent scenario.

Table 2 shows the confusion matrix for the user-dependent scenario. The “using mobile phone” and “writing” tasks were recognized with accuracies over 99%. In contrast, “adjusting eyeglasses” had the lowest accuracy, 78.36%, and was mainly confused with “repeated face touching”. Across the face-touching (red text) and non-face-touching (black text) categories shown in Table 2, there were more within-category confusions than between-category confusions, especially among the activities in the face-touching category.
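The two evaluation scenarios differ only in how the data is split. The sketch below shows one way the splits could be generated. It assumes each recording is stored as a `(user_id, label, sequence)` tuple; the function names and the `train_fraction` parameter are hypothetical and do not reflect the exact protocol used in the paper.

```python
def user_dependent_splits(samples, train_fraction=0.5):
    """Yield (user, train, test) where both sets come from the same user.

    Assumption: within each user, the first `train_fraction` of the
    recordings of every activity is used for training and the rest for testing.
    """
    by_user = {}
    for user, label, seq in samples:
        by_user.setdefault(user, {}).setdefault(label, []).append((user, label, seq))
    for user in sorted(by_user):
        train, test = [], []
        for label_samples in by_user[user].values():
            cut = max(1, int(len(label_samples) * train_fraction))
            train += label_samples[:cut]
            test += label_samples[cut:]
        yield user, train, test

def user_independent_splits(samples):
    """Yield (held_out_user, train, test) with one user held out per fold."""
    users = sorted({user for user, _, _ in samples})
    for held_out in users:
        train = [s for s in samples if s[0] != held_out]
        test = [s for s in samples if s[0] == held_out]
        yield held_out, train, test
```

In the user-independent split, no recording from the held-out user is ever seen during training, so the classifier has to generalize across differences in how people move. This is consistent with the lower accuracies reported for that scenario.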

Table 2: Confusion matrix of individual activity recognition in the user-dependent scenario. Face-touching activities are colored in red. Matrix elements highlighted in deep blue indicate correct classifications, while elements highlighted in light blue indicate relatively high rates of confusion (i.e., larger than 1.00%).
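To make the link between the confusion matrix and the binary result concrete, the following sketch computes row-normalized confusion percentages from `(true, predicted)` label pairs and separates within-category from between-category errors. Only between-category errors count as mistakes once the labels are collapsed into face-touching versus non-face-touching. The function names are hypothetical, and the set of face-touching labels passed in is an assumption supplied by the caller, not taken from Table 1.

```python
from collections import Counter

def confusion_percentages(pairs):
    """Map (true, predicted) to the percentage of `true` samples predicted so."""
    counts = Counter(pairs)
    totals = Counter(true for true, _ in pairs)
    return {(t, p): 100.0 * c / totals[t] for (t, p), c in counts.items()}

def split_confusions(pairs, face_touching_labels):
    """Count errors that stay within a category vs. cross categories."""
    within = between = 0
    for true, pred in pairs:
        if true == pred:
            continue
        if (true in face_touching_labels) == (pred in face_touching_labels):
            within += 1   # wrong activity, but same category
        else:
            between += 1  # face-touching mistaken for non-face-touching or vice versa
    return within, between

def multiclass_accuracy(pairs):
    """Fraction of samples whose exact activity label was predicted."""
    return sum(t == p for t, p in pairs) / len(pairs)

def binary_accuracy(pairs, face_touching_labels):
    """Fraction of samples whose category (face-touching or not) was predicted."""
    correct = sum((t in face_touching_labels) == (p in face_touching_labels)
                  for t, p in pairs)
    return correct / len(pairs)
```

Since within-category errors disappear after collapsing the labels, binary accuracy can never be lower than multiclass accuracy computed on the same predictions.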

Takeaways

- Smartwatches have the potential to support reducing face touching, as well as other health goals such as quitting smoking and treating hair-pulling disorder.

- Multiclass classification is challenging; reformulating the task as binary classification substantially increases recognition accuracy.

- DTW has the potential to provide a personalized face-touching detection service on resource-constrained devices such as smartwatches.

Files