Overview

The INIT Lab and Ruiz HCI Lab have been collaborating with Dr. Pavlo Antonenko from the College of Education at UF to investigate the relationship between children’s cognitive development and their touchscreen interactions. We are pleased to share that our paper “Examining the Link between Children’s Cognitive Development and Touchscreen Interaction Patterns” was accepted as a short paper to ACM ICMI 2020. I’m grateful for the opportunity to share our work virtually at the ICMI 2020 conference. Here is my pre-recorded talk.

Motivation and Project Goal

It is well established that children’s touch and gesture interactions on touchscreen devices differ from those of adults, and much prior work has shown that children’s input is recognized less accurately than adults’ input. Researchers have also shown that, when children are grouped simply by age, recognition of touchscreen input is poorest for young children and improves for older children; however, individual differences in cognitive and motor development could also affect children’s input. Understanding how cognitive and motor skills influence touchscreen interactions, rather than relying only on coarser measures like age and grade level, could help in developing touchscreen interfaces personalized and tailored to each child.

Method

To investigate how cognitive and motor development may be related to children’s touchscreen interactions, we conducted a study with 28 children aged 4 to 7 that included (1) validated assessments of the children’s motor and cognitive skills and (2) typical touchscreen target acquisition and gesture tasks. The study had two phases.

Phase 1: NIH Toolbox

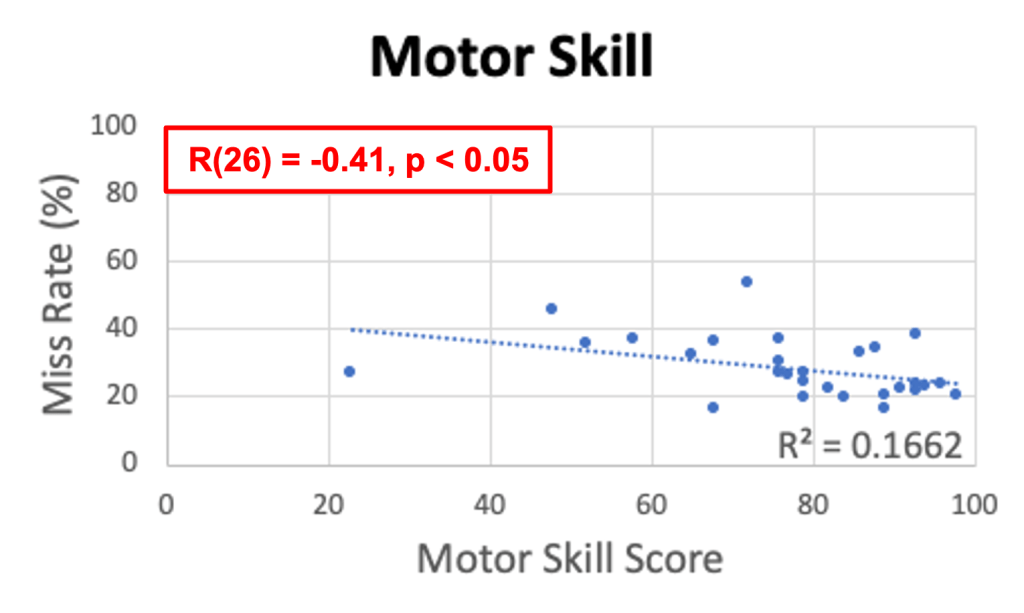

In the first phase, participants completed two tasks from the NIH Toolbox® application suite on an iPad: (1) the 9-hole Pegboard Dexterity Test, and (2) the Dimensional Change Card Sort Test. These two tasks assess participants’ fine motor skill and their executive function and attention, respectively.

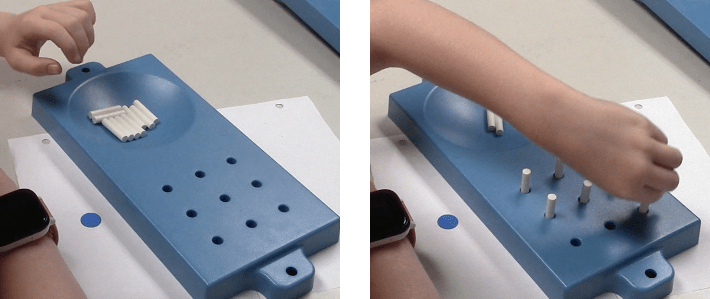

(1) The 9-hole Pegboard Dexterity Test

This test measures manual dexterity in the fingers. Participants are asked to place 9 pegs into a board with 9 holes and then remove them, as quickly as possible.

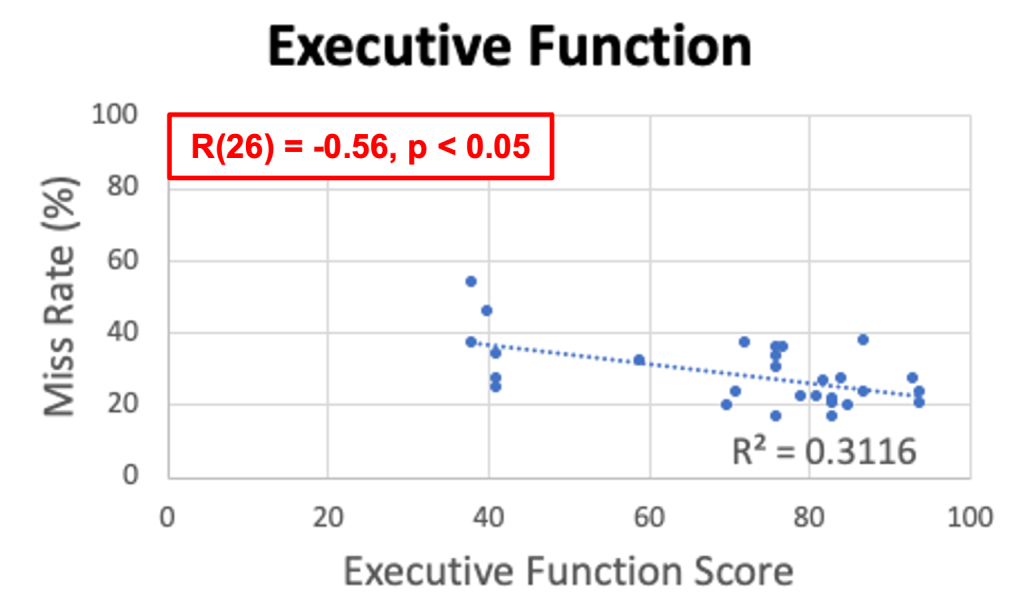

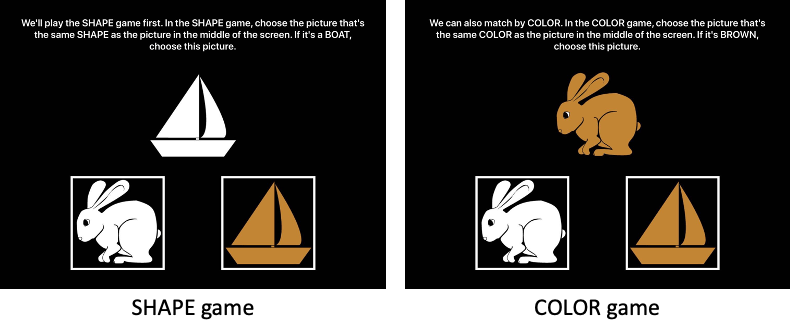

(2) The Dimensional Change Card Sort Test

This test measures a participant’s executive function, which NIH defines as “the capacity to plan, organize, and monitor the execution of behaviors that are strategically directed in a goal-oriented manner.” In the test, the participant is shown a series of trials, each with two images, and asked to select the one that matches either a given color or a given shape.

Phase 2: Target and Gesture Applications

In the second phase, the participants completed touchscreen interaction tasks directly inspired by prior work. The phase included two tasks: (1) a target touching task, and (2) a gesture drawing task.

(1) Target Touching

In this task, participants were asked to touch a series of 104 targets, which appeared as blue squares on the screen. Target sizes varied: very small (0.125 in), small (0.25 in), medium (0.375 in), and large (0.5 in).

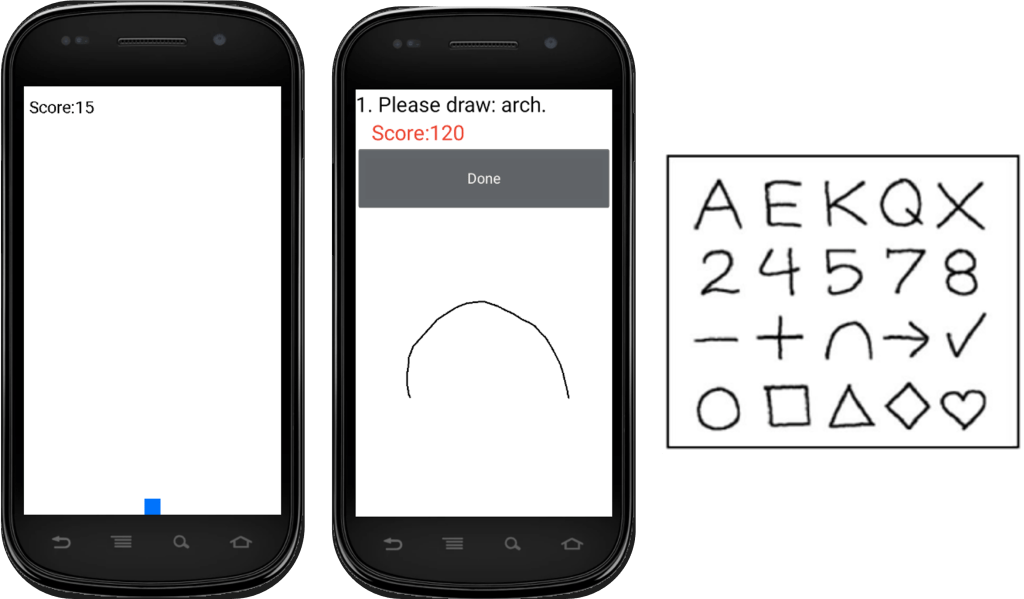

(2) Gesture Drawing

This task presented a blank canvas on which participants were instructed to draw a series of gestures. We used a gesture set from prior work consisting of 20 gesture types: letters (A, E, K, Q, X), numbers (2, 4, 5, 7, 8), symbols (line, plus, arch, arrowhead, checkmark), and shapes (circle, rectangle, triangle, diamond, heart). The set was originally selected based on a survey of psychological and developmental literature as well as of mobile applications for children.

Analysis

We used Pearson correlation tests in R to investigate the relationship between four independent variables (age, grade level, motor skill, and executive function) and two dependent variables (target miss rate and gesture recognition rate). Our findings indicate that (1) all four factors show significant but moderate negative correlations with the target miss rate and (2) all four factors show significant but moderate positive correlations with the gesture recognition rate.
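As a rough illustration of what each of these tests computes, the sketch below implements Pearson’s r and the t statistic used to judge whether r differs from zero. It is plain Python, not the R code used in the study; R’s `cor.test(x, y, method = "pearson")` reports the same r and t, plus a p-value from the t distribution with n - 2 degrees of freedom. The function names are hypothetical, and the p-value step is left out to keep the sketch within the standard library.

```python
import math

def pearson_r(xs, ys):
    """Pearson's r: covariance divided by the product of the standard deviations."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two paired samples of equal length >= 3")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def t_statistic(r, n):
    """t for H0: rho = 0, on n - 2 degrees of freedom."""
    if abs(r) >= 1:
        # A perfect correlation gives an unbounded t statistic.
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r * r))
```

A negative r (as reported here between each factor and target miss rate) means the miss rate tends to fall as the factor rises; a positive r with recognition rate means recognition tends to improve as the factor rises.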

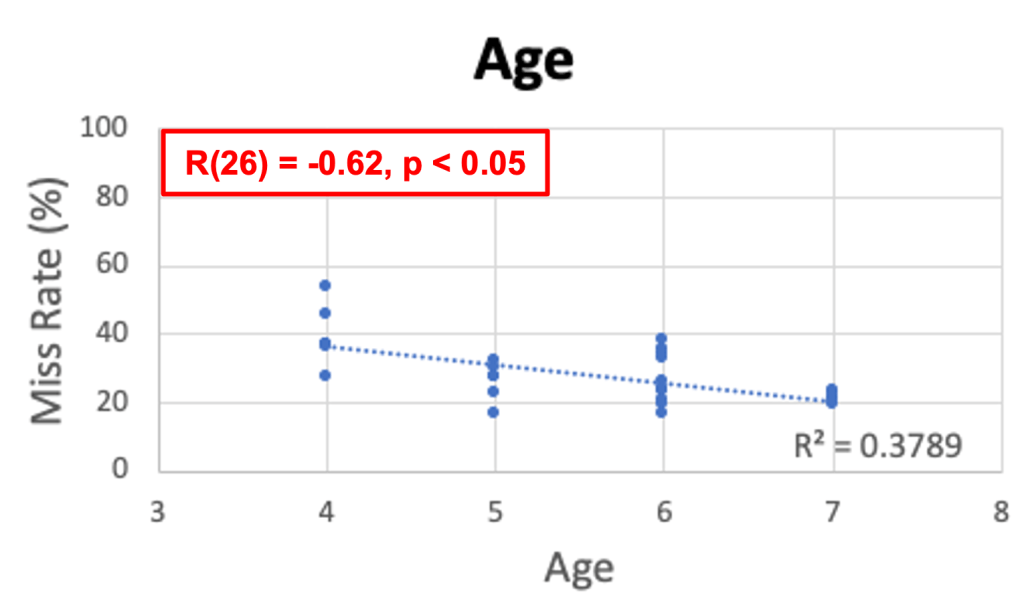

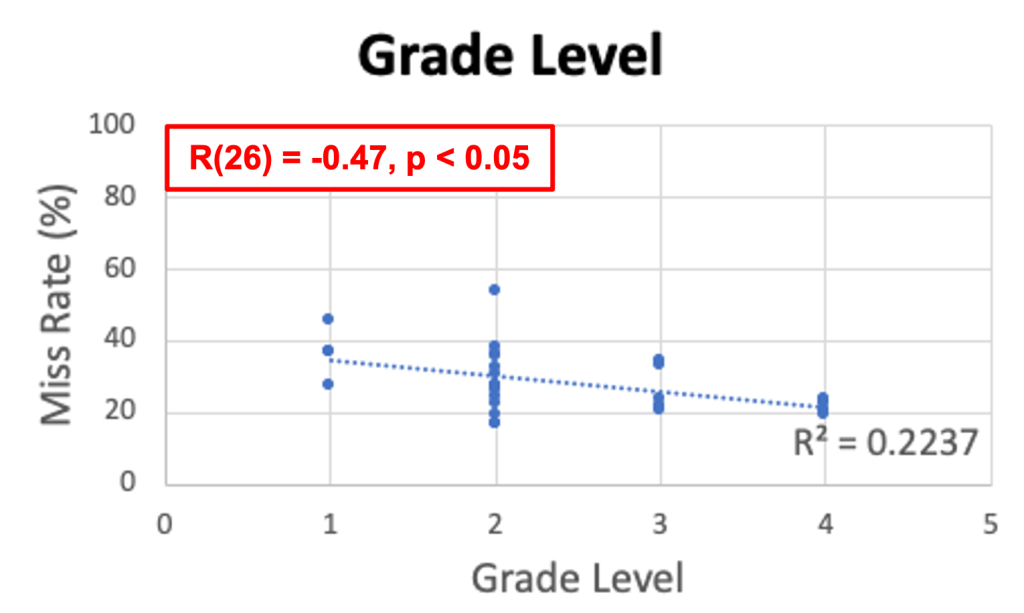

(1) Target Miss Rate

We measure the target miss rate as the number of a user’s misses divided by the total number of targets, as in prior work. We exclude holdovers: touches at the location of a target that has just disappeared because the user already hit it without realizing it. We found significant but moderate negative correlations between the target miss rate and all independent variables.
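The miss-rate calculation with holdovers excluded can be sketched as follows. This uses a hypothetical data model, not the study’s logging code: we assume each `Target` records the touches made while it was on screen, count a target as missed if at least one non-holdover touch landed outside it, and treat a touch as a holdover if it lands inside the previous (just-hit) target. No time window is applied to holdovers, which a real analysis might add.

```python
from dataclasses import dataclass, field

@dataclass
class Target:
    """One square target; center-based coordinates in inches (hypothetical)."""
    x: float
    y: float
    size: float  # side length, e.g. 0.125, 0.25, 0.375, or 0.5 in
    touches: list = field(default_factory=list)  # (x, y) touches logged while shown

    def contains(self, px, py):
        half = self.size / 2
        return abs(px - self.x) <= half and abs(py - self.y) <= half

def is_holdover(touch, previous):
    """A touch on the spot of the target that was just hit and removed."""
    return previous is not None and previous.contains(*touch)

def target_miss_rate(targets):
    """Fraction of targets with at least one non-holdover touch outside them."""
    missed = 0
    for i, target in enumerate(targets):
        previous = targets[i - 1] if i > 0 else None
        counted = [t for t in target.touches if not is_holdover(t, previous)]
        if any(not target.contains(*t) for t in counted):
            missed += 1
    return missed / len(targets)
```

For example, if a child taps the spot of the previous target once more before hitting the next one, that extra tap is ignored rather than counted as a miss on the new target.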

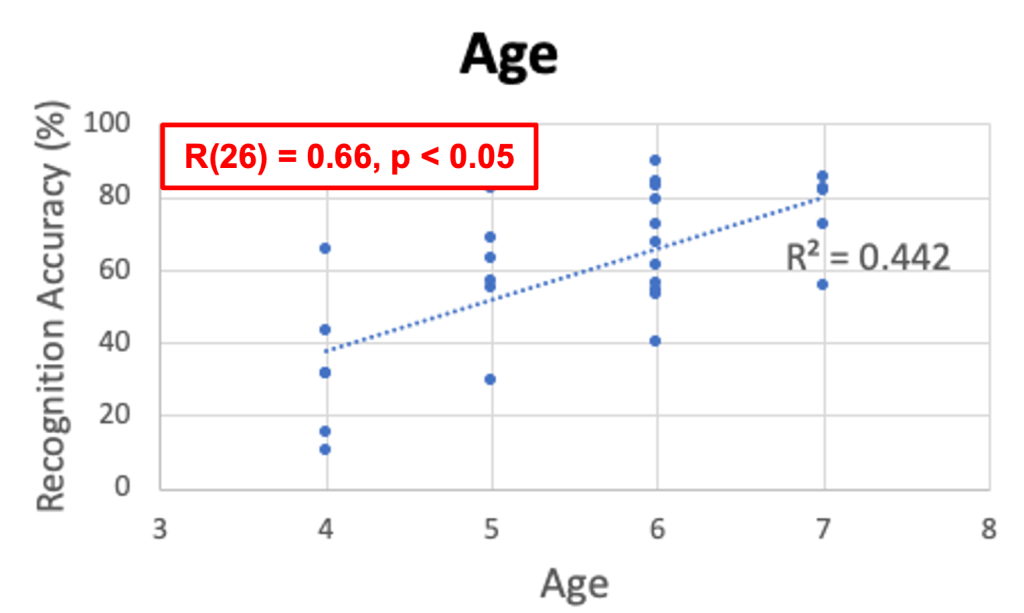

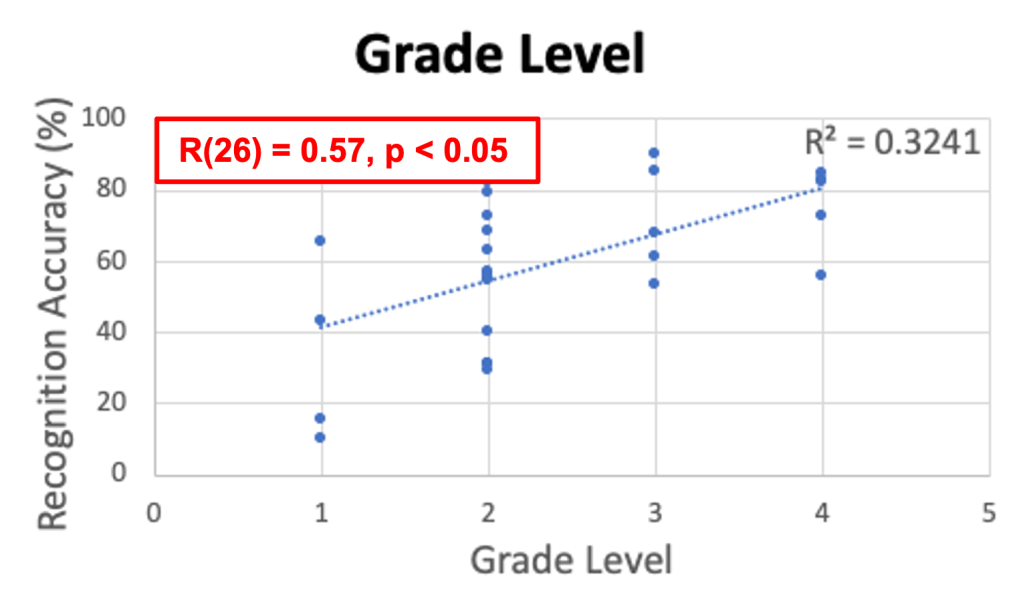

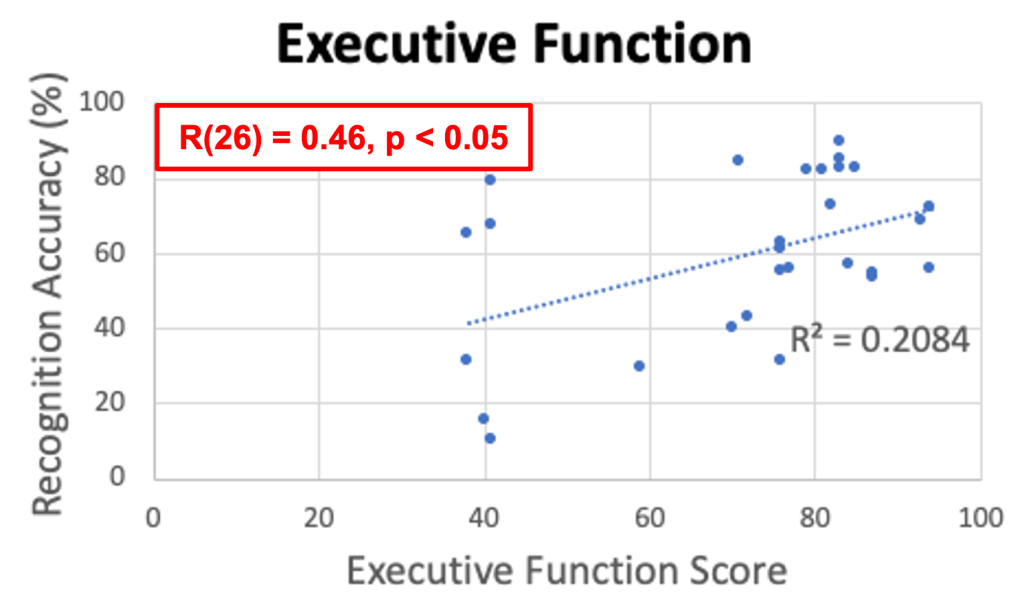

(2) User-Dependent Recognition Rate

To measure automatic recognition rates of the children’s touchscreen gestures, we used the $P recognizer, which makes our work comparable to prior work on children’s gestures. We followed Wobbrock et al.’s procedure for systematic testing in a user-dependent scenario, in which the recognizer is trained and tested on gestures from the same user. We found significant but moderate positive correlations between gesture recognition rate and all independent variables.
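For readers unfamiliar with $P, it treats a gesture as an unordered cloud of points, so the number, order, and direction of strokes do not matter. The sketch below is a condensed Python reading of the published $P pseudocode (resample to 32 points, scale, center, then greedy cloud matching), followed by a user-dependent evaluation loop loosely modeled on Wobbrock et al.’s procedure. It is not the study’s code; the function names, the number of trials, and the random seed are assumptions for illustration.

```python
import math
import random
from dataclasses import dataclass

N = 32  # resampled cloud size used in the published $P pseudocode

@dataclass(frozen=True)
class Point:
    x: float
    y: float
    stroke: int  # index of the stroke this point belongs to

def dist(a, b):
    return math.hypot(a.x - b.x, a.y - b.y)

def resample(pts, n=N):
    """Resample all strokes to n points spaced evenly along the drawn path."""
    pts = list(pts)
    interval = sum(dist(a, b) for a, b in zip(pts, pts[1:])
                   if a.stroke == b.stroke) / (n - 1)
    out, acc, i = [pts[0]], 0.0, 1
    while i < len(pts):
        a, b = pts[i - 1], pts[i]
        if a.stroke == b.stroke:  # ignore the jump between strokes
            d = dist(a, b)
            if d > 0 and acc + d >= interval:
                t = (interval - acc) / d
                q = Point(a.x + t * (b.x - a.x), a.y + t * (b.y - a.y), b.stroke)
                out.append(q)
                pts.insert(i, q)  # q becomes the start of the next segment
                acc = 0.0
            else:
                acc += d
        i += 1
    out += [pts[-1]] * (n - len(out))  # pad if rounding left us short
    return out[:n]

def normalize(pts, n=N):
    """Resample, scale uniformly into the unit box, and center on the centroid."""
    pts = resample(pts, n)
    min_x, min_y = min(p.x for p in pts), min(p.y for p in pts)
    size = max(max(p.x for p in pts) - min_x, max(p.y for p in pts) - min_y) or 1.0
    pts = [Point((p.x - min_x) / size, (p.y - min_y) / size, p.stroke) for p in pts]
    cx, cy = sum(p.x for p in pts) / n, sum(p.y for p in pts) / n
    return [Point(p.x - cx, p.y - cy, p.stroke) for p in pts]

def cloud_distance(a, b, start):
    """Match each point of a (from start) to its nearest unmatched point of b."""
    n, matched, total, i = len(a), [False] * len(a), 0.0, start
    while True:
        index = min((j for j in range(n) if not matched[j]),
                    key=lambda j: dist(a[i], b[j]))
        matched[index] = True
        weight = 1 - ((i - start + n) % n) / n  # earlier matches weigh more
        total += weight * dist(a[i], b[index])
        i = (i + 1) % n
        if i == start:
            return total

def greedy_cloud_match(a, b, epsilon=0.5):
    step = max(1, math.floor(len(a) ** (1 - epsilon)))
    return min(min(cloud_distance(a, b, s), cloud_distance(b, a, s))
               for s in range(0, len(a), step))

def recognize(candidate, templates):
    """templates: list of (label, normalized points); returns the closest label."""
    pts = normalize(candidate)
    return min(templates, key=lambda tpl: greedy_cloud_match(pts, tpl[1]))[0]

def user_dependent_accuracy(samples, t, trials=100, seed=0):
    """samples: {label: [raw gestures from ONE child]}; t templates per label."""
    rng = random.Random(seed)
    correct = total = 0
    for _ in range(trials):
        templates, tests = [], []
        for label, gestures in samples.items():
            picks = rng.sample(gestures, t + 1)  # needs at least t + 1 samples
            tests.append((label, picks[0]))
            templates += [(label, normalize(g)) for g in picks[1:]]
        for label, g in tests:
            correct += recognize(g, templates) == label
            total += 1
    return correct / total
```

Computing `user_dependent_accuracy` separately for each child (at a fixed number of templates `t`) yields one recognition rate per child, which is the kind of per-participant value that can then be correlated with age, grade level, motor skill, and executive function.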

Takeaways

(1) Using motor skill and executive function as a lens through which to examine touchscreen interactions does not provide much additional nuance beyond simply looking at age or grade level as prior work has done. Low motor skill will generally predict poor performance compared to high motor skill, but the same can be said for the relationship between age and performance and between grade level and performance.

(2) It is reasonable for researchers to use age or grade level as a proxy for developmental level when studying children’s touchscreen interactions, especially given the additional overhead incurred by measuring children’s cognitive and motor skills.
